The Evolution of Image Synthesis: Introducing DALL-E 3

As someone who uses Midjourney for image generation, I was very impressed with the DALL-E 3 preview that Sam Altman posted on his Twitter/X account (I embedded it at the end of this article). It may soon be time to go all in with OpenAI, as I really liked the integration with ChatGPT, my preferred chatbot.

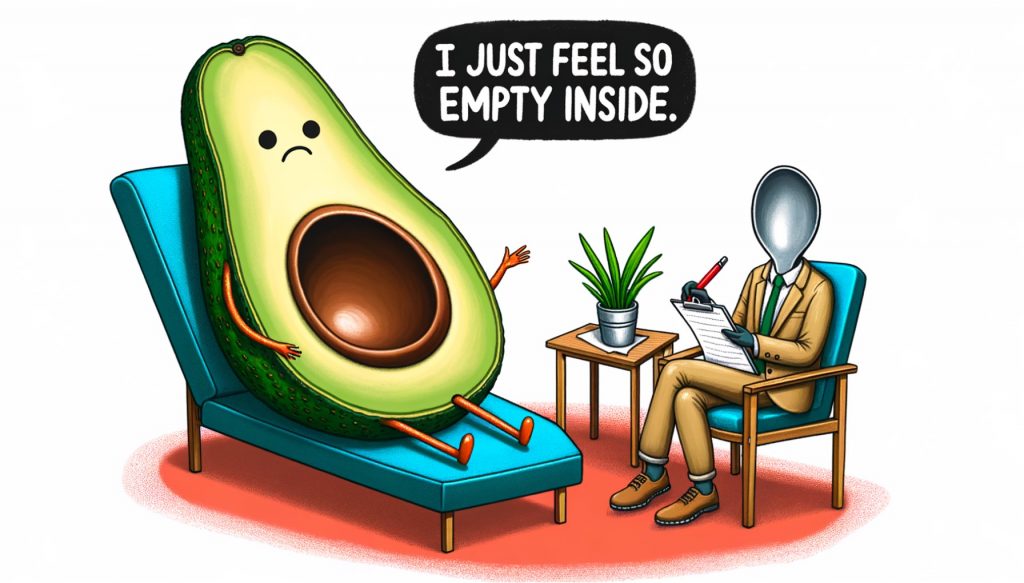

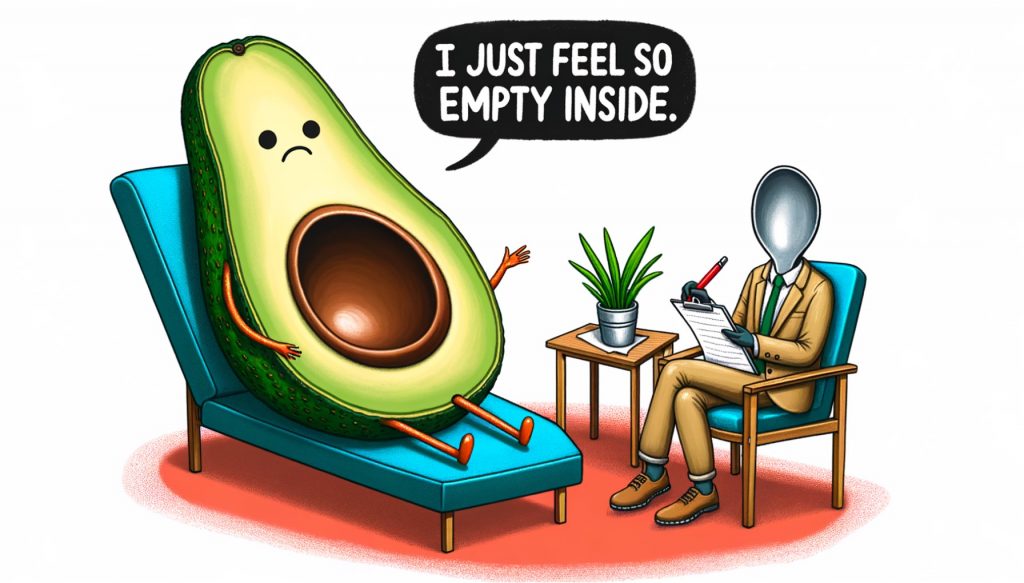

Sample image provided by OpenAI, featuring a speech bubble

A New Era of Image Generation

In the rapidly advancing world of artificial intelligence, OpenAI seems to have reached another milestone. On Wednesday, the company unveiled DALL-E 3, its most sophisticated AI image-synthesis model to date. Here’s a closer look at what DALL-E 3 brings to the table, how it compares to its predecessors, and what its capabilities could mean.

Breaking New Ground

The standout feature of DALL-E 3 is its full integration with ChatGPT, OpenAI’s prominent chatbot. DALL-E 3 also excels at interpreting intricate descriptions and at generating in-image text, such as labels and signs, an area where earlier models struggled.

If DALL-E 2 was a marvel, DALL-E 3 seems poised to redefine the standards of image synthesis. OpenAI has not disclosed specific technical details, but based on past practice it is reasonable to assume that DALL-E 3, like its predecessors, was trained on millions of images made by human photographers and artists, some of which may have been sourced from stock websites such as Shutterstock. The real gains, however, likely come from improved training techniques and longer, more compute-intensive training runs.

Visualizing the Progress

Samples provided by OpenAI suggest that DALL-E 3 has made a major leap in prompt-following capability. The promotional images are cherry-picked, of course, but they still paint a picture of a highly advanced model. OpenAI highlights dramatic improvements in how DALL-E 3 renders fine details: it handles tricky elements such as hands better, producing eye-catching images without workarounds or ‘prompt engineering’.

Competitive Landscape

DALL-E 3’s capabilities appear to outshine even formidable competitors in the AI image-synthesis space. Midjourney, my current preferred model, produces photorealistic details but often requires intricate prompt tweaking to get good output. DALL-E 3, on the other hand, handles in-image text seamlessly, a capability its predecessor lacked. In one sample, DALL-E 3 placed a character’s quote neatly inside a speech bubble, something that had remained elusive in AI image generation until now.

More Than Just a Synthesizer

DALL-E 3’s integration with ChatGPT points to a new era in AI capabilities. OpenAI says it has been built “natively” on ChatGPT, which means it will debut as a built-in feature of ChatGPT Plus. This integration suggests a future where AI-driven conversations are enhanced with visuals, combining text and images in a single interaction. Imagine a brainstorming session with your AI assistant in which it generates images in real time based on the conversation’s context. This concept isn’t far-fetched: Microsoft’s Bing Chat assistant, which uses OpenAI’s technology, has offered similar image generation since March.

Conclusion

The unveiling of DALL-E 3 marks a significant step forward in AI-driven image synthesis. As AI models keep improving in realism and accuracy, models like DALL-E 3 are likely to play a pivotal role in shaping our digital future. DALL-E 3 is expected to reach ChatGPT Plus and Enterprise customers in the coming month, and the possibilities look bounded only by our imagination.

also, the video we made for dalle 3 is SO CUTE: pic.twitter.com/k1FOFTOsU5

— Sam Altman (@sama) September 20, 2023